This post is more a set of written thoughts about current programming practices in APIs than a serious treatment of the above-mentioned language and framework. Training in Scala, Golang, Reactive Functional Programming, Parallel and Concurrent Programming, Apache Spark, etc. has been the seed to understand and, most importantly, to implement these best practices in enterprise projects.

The old times when it was said "Performance programming is not relevant: the real bottleneck is the database!" have (fortunately) passed. There is no longer a database to hide our low-performance code behind, as NoSQL, microservices architectures and distributed systems have largely removed that bottleneck.

Besides, memory and concurrency handling are important for security as well. Microsoft security engineer Matt Miller said that around 70% of all Microsoft's yearly patches were fixes for memory safety bugs. Languages with fine-grained memory handling, like C++, make it possible to write memory-unsafe code. Other languages, like the JVM languages, require careful handling of the memory resources used in threads to minimize vulnerabilities.

The conclusion is that by improving concurrency and parallelism we are also improving safety. As programmers, we have to pay attention to how concurrency and parallelism work in our apps to be sure they meet the security requirements.

Concurrency and Parallelism

Firstly, we'd like to distinguish between Concurrency and Parallelism concepts.

It's simple. In Concurrent Programming we use (for instance) a single CPU core. However, we have different consumers. To attend to these consumers we split the computing time of the CPU core among different processes/tasks. We always do only one thing at a time (remember, we have only 1 CPU core), but we divide the CPU core time among different tasks. The consumers get the impression that several things are happening at the same time, but from the CPU perspective only one task is processed at a time.

On the other side, Parallel Programming is quite different. Once again we have several consumers, but in this case we have (for instance) several CPU cores available. Each process/task is split into different threads that each perform a specific operation. These smaller operations happen at the same time because they run on different CPU cores.
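The difference can be sketched in Go, the language used later in this post. This is only an illustration under stated assumptions: `sumRange` and `parallelSum` are hypothetical helpers, not part of any library. The work is split into chunks that run as separate tasks; on a single core those tasks are merely interleaved (concurrency), while on several cores they can really run at the same time (parallelism).

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// sumRange adds the integers in [from, to).
func sumRange(from, to int) int {
	total := 0
	for i := from; i < to; i++ {
		total += i
	}
	return total
}

// parallelSum (hypothetical helper) splits [0, n) into `workers` chunks and
// sums each chunk in its own goroutine. Whether the chunks run in parallel or
// are only interleaved depends on how many CPU cores are available.
func parallelSum(n, workers int) int {
	partial := make([]int, workers) // one slot per worker: no shared writes
	chunk := (n + workers - 1) / workers

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		from := w * chunk
		to := from + chunk
		if to > n {
			to = n
		}
		wg.Add(1)
		go func(slot, from, to int) {
			defer wg.Done()
			partial[slot] = sumRange(from, to)
		}(w, from, to)
	}
	wg.Wait() // block until every chunk has been summed

	total := 0
	for _, p := range partial {
		total += p
	}
	return total
}

func main() {
	fmt.Println("CPU cores available:", runtime.NumCPU())
	fmt.Println("sequential:", sumRange(0, 1000000))
	fmt.Println("split:     ", parallelSum(1000000, runtime.NumCPU()))
}
```

Both calls return the same total; only the way the work is scheduled changes.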

The natural conclusion is: "Well, it is clear. There are tons of multi-core CPUs. So, we have to use all of them all the time and our tasks will be executed much faster". It is not that easy. We have to consider several factors.

The Traditional Model

When processes are handled in the traditional model, threads are the smallest computational units. In this model memory is shared, as common variables are used for communication across different threads. Therefore, in the traditional model we find a set of well-known issues related to multi-threading management: race conditions, starvation, deadlocks, livelocks, etc., all caused by the use of this shared memory. You can find a simple description of these issues here.
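To make the shared-memory problem concrete, here is a minimal sketch in Go (which also supports this traditional style). `SafeCounter` and `countConcurrently` are hypothetical names chosen for the illustration. Many tasks increment one shared variable; without the mutex, two tasks could read the same old value and one increment would be lost, which is a classic race condition.

```go
package main

import (
	"fmt"
	"sync"
)

// SafeCounter (hypothetical type) guards a shared integer with a mutex.
type SafeCounter struct {
	mu    sync.Mutex
	value int
}

// Inc increments the counter; the lock makes read-modify-write atomic.
func (c *SafeCounter) Inc() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.value++
}

// Value returns the current count under the same lock.
func (c *SafeCounter) Value() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.value
}

// countConcurrently starts n goroutines that each increment the shared
// counter once, waits for all of them, and returns the final count.
func countConcurrently(n int) int {
	var counter SafeCounter
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			counter.Inc()
		}()
	}
	wg.Wait()
	return counter.Value()
}

func main() {
	fmt.Println(countConcurrently(1000)) // prints 1000
}
```

Removing the lock from `Inc` and running the program with Go's race detector (`go run -race`) is one way to see the problem reported.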

The Traditional Model is used in C, Java, etc. Multi-threading had to be handled manually, and frameworks like Spring did little to improve things, offering a rather poor way to manage multi-threaded operations. Other languages (e.g. interpreted languages like JavaScript and Python) largely sidestep these issues because their standard runtimes traditionally execute code on one thread at a time (the JavaScript event loop, the global interpreter lock in CPython), so they cannot run threads in parallel on several cores. Besides, thread-related issues are behind most of the problems related to high latency and poor performance.

It was clear that new solutions beyond the Traditional Model were needed, and this is the main reason behind the Reactive Functional Programming model (this classical introduction is mandatory reading). We'll review a couple of use cases with Go and with Akka in Scala. For your information, many other solutions, such as Vert.x, have emerged in recent years. Anyway, we'll leave a post about RFP for the near future.

Thread-related issues are behind most of the problems related to high latency and poor performance with classical frameworks and languages based on the Traditional Model.

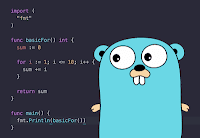

Going Forward with Go

The Go model handles concurrency by using goroutines. The idea behind goroutines is to make it easy to schedule different executions concurrently. Goroutines are not threads (they are multiplexed onto a smaller number of OS threads). The cost of creating new threads is huge compared with the cost of goroutines. Besides, goroutines can easily talk to each other by using channels.

Comparing goroutines and threads, we can see the main differences:

- Goroutines are extremely cheap when compared to threads. Their stack starts at just a few KB and can grow and shrink according to the requirements of the application, while for threads the stack size must be specified up front and is fixed.

- Goroutines are multiplexed onto a smaller number of OS threads. A program with thousands of goroutines may run on just a single thread. If a goroutine on that thread blocks (for example, waiting for user input), the runtime creates another OS thread and moves the remaining goroutines to it, so they keep running. All of this is taken care of by the Go runtime; programmers are shielded from this complexity by a clear API for working with concurrency.

- Goroutines communicate using channels. Channels are designed to help prevent race conditions: data is passed between goroutines instead of being accessed through shared memory. Channels can be thought of as pipes that goroutines use to exchange information.

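Let's see an example:

    package main

    import (
        "fmt"
        "time"
    )

    func greetings() {
        fmt.Println("Hello goroutine!")
    }

    func main() {
        // Start greetings concurrently; main does not wait for it
        go greetings()
        // Give the goroutine time to run before main continues
        time.Sleep(1 * time.Second)
        fmt.Println("main function")
    }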

You can run the code at the Go Playground and see what happens. Try removing the 1 second delay (time.Sleep(1 * time.Second)): the program will most likely exit before the goroutine gets a chance to print. Anyway, this is a good explanation, with basic examples, of how goroutines work.
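Sleeping is fine for a demo, but it only guesses how long the goroutine needs. A common alternative is `sync.WaitGroup` from the standard library, which lets main wait exactly until the goroutines are done. The sketch below is an illustration, not the original author's code; `greetAll` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"sync"
)

// greetAll (hypothetical helper) builds one greeting per name, each in its
// own goroutine, and returns only after all goroutines have finished.
func greetAll(names []string) []string {
	greetings := make([]string, len(names))
	var wg sync.WaitGroup
	for i, name := range names {
		wg.Add(1) // register one more goroutine to wait for
		go func(i int, name string) {
			defer wg.Done() // signal completion even on early return
			greetings[i] = "Hello " + name + "!"
		}(i, name)
	}
	wg.Wait() // block until every registered goroutine called Done
	return greetings
}

func main() {
	for _, g := range greetAll([]string{"goroutine", "gopher"}) {
		fmt.Println(g)
	}
	fmt.Println("main function")
}
```

Each goroutine writes to its own slice index, so no extra locking is needed here.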

A practical use of goroutines can be found in microservices. Imagine a microservice written in Go that starts an HTTP server to expose some REST web services. This microservice also listens to a queue to receive messages (it exposes HTTP and AMQP endpoints). Both processes run at the same time and without interruption (they are waiting for something all the time). In this case a goroutine is useful to start both processes without any problem.

    func main() {
        ...
        logger.Infof("Invoking goroutine starting HTTP server.")
        // Run the HTTP server in its own goroutine so main can carry on
        go startHTTPServer(configuration, gin.ReleaseMode)

        logger.Infof("Initializing message bus service...")
        // Wait for the configured delay before subscribing to the queue
        <-time.After(time.Duration(configuration.MessageBus.Freq) * time.Second)
        messagebus.SubscribeIncomingQueue()
    }

Communication across different concurrent operations is important. To synchronize the execution of goroutines we use channels. A channel in Go is a communication object that goroutines use to talk to each other and, therefore, to synchronize their execution. A Go channel is basically an I/O pipe: one goroutine can send data into a channel while other goroutines read that data from the same channel. Clear, right?

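    package main

    import (
        "fmt"
    )

    // greet blocks until a value arrives on c, then prints it
    func greet(c chan string) {
        fmt.Println("Good Day " + <-c)
    }

    func main() {
        fmt.Println("Starting main")
        c := make(chan string) // unbuffered channel of strings
        go greet(c)
        c <- "You" // blocks until greet receives the value
        fmt.Println("Stopping main")
    }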

It's easy, right? The greet function was invoked as a goroutine. Despite this, we were able to pass a message to the function using the channel. Obviously this is a very basic example, and channels can be used for complex synchronization and scheduling of goroutines in your Go apps.
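As a slightly larger sketch of channel-based synchronization, the example below sends work to a goroutine over one channel and reads results back over another. Note that in the greet example main never waits for the print itself to finish; here, closing the channels tells each side when the other is done, so nothing is left to timing. The names `square` and `squareAll` are hypothetical, chosen only for this illustration.

```go
package main

import "fmt"

// square reads numbers from in, writes their squares to out, and closes out
// once in has been closed and drained.
func square(in <-chan int, out chan<- int) {
	for n := range in {
		out <- n * n
	}
	close(out)
}

// squareAll (hypothetical helper) pushes nums through a single worker
// goroutine and collects the results in the order they were sent.
func squareAll(nums []int) []int {
	in := make(chan int)
	out := make(chan int)
	go square(in, out)

	// Feed the worker from a separate goroutine so this function can
	// read results at the same time.
	go func() {
		for _, n := range nums {
			in <- n
		}
		close(in) // no more work: lets the worker's range loop end
	}()

	var results []int
	for r := range out { // ends when the worker closes out
		results = append(results, r)
	}
	return results
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3, 4})) // prints [1 4 9 16]
}
```

With a single worker the order of results matches the order of inputs; with several workers that guarantee would no longer hold.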

An Actor for each Task

As we know, Akka is based on the Actors Model. This model is based on computing containers (Actors) that have a State, a Behavior and a Mailbox (to receive messages from other Actors), and that can also have children and a supervisor with a given strategy. Actors are created from a definition, and many instances can be created. They are completely independent and isolated, and therefore they do not share resources. Most importantly, the messages they exchange should be immutable, so an Actor's state is only ever changed by the Actor itself. An Actor can spawn other Actors (children) with the same isolation properties. Communication between Actors is always asynchronous and non-blocking.

Akka is a framework for Scala and Java that implements the Actors Model.

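    import akka.actor.Actor

    class ChildActor extends Actor {

      // The actual work done for each text message
      def processMessage(msg: String): String = {
        msg.toUpperCase
      }

      // Behavior: match on the type of each incoming message
      def receive: Receive = {
        case msg: String => sender() ! processMessage(msg)
        case _: Error    => throw new Exception
      }
    }

Now, we can use this actor in different ways: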

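    import akka.actor.{ActorSystem, Props}
    import akka.pattern.ask
    import akka.util.Timeout
    import scala.concurrent.duration._

    object Sample extends App {
      val system = ActorSystem("System")
      val actor = system.actorOf(Props(new ChildActor))

      // Tell (fire-and-forget): drop the message in the actor's mailbox
      actor ! "Hello World"

      // Ask: returns a Future with the reply and needs an implicit timeout
      // (3 seconds is an arbitrary value chosen for this example)
      implicit val timeout: Timeout = Timeout(3.seconds)
      val reply = actor ? "Hello World" // You have to handle the async message here
    }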

This is the plain case. You can see how we can send messages to the actor using the mailbox.
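For readers coming from the Go section, the mailbox idea can be approximated with a goroutine that owns its state and a channel that plays the role of the mailbox. This is only an analogy sketched under stated assumptions, not how Akka is implemented; `message`, `startUpperActor` and `ask` are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// message is what lands in the mailbox: a payload plus a reply channel.
type message struct {
	text  string
	reply chan<- string
}

// startUpperActor (hypothetical) launches a goroutine that owns its state
// and processes mailbox messages one at a time, like an actor would.
func startUpperActor() chan<- message {
	mailbox := make(chan message, 16) // buffered so senders rarely block
	go func() {
		processed := 0 // private state: only this goroutine touches it
		for msg := range mailbox {
			processed++
			msg.reply <- fmt.Sprintf("%d:%s", processed, strings.ToUpper(msg.text))
		}
	}()
	return mailbox
}

// ask sends text to the actor and waits for its reply.
func ask(mailbox chan<- message, text string) string {
	reply := make(chan string, 1)
	mailbox <- message{text: text, reply: reply}
	return <-reply
}

func main() {
	actor := startUpperActor()
	fmt.Println(ask(actor, "Hello World")) // prints 1:HELLO WORLD
	fmt.Println(ask(actor, "again"))       // prints 2:AGAIN
	close(actor)                           // closing the mailbox stops the goroutine
}
```

Because only the owning goroutine ever reads or writes `processed`, no lock is needed, which is the same isolation property the Actors Model relies on.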

What if you are receiving hundreds of messages every minute at a streaming API endpoint? Well, Akka is especially brilliant in this kind of situation. A new actor can be created for each message, so that it is processed in an isolated and parallel way. The only limits are the CPU capabilities and the available system memory.

The programmer has to be smart enough to handle resources and timeouts, and to collect metrics on the average duration and cost of a single operation, in order to estimate the appropriate amount of resources and the expected reliability. Done well, you can be sure your memory and CPU cores will be used whenever they are needed, with no resources sitting idle while your app is starving for lack of them.

Conclusions?

- For us, the Traditional Model of computing resources is no longer useful, as we have to work in Cloud Native environments with distributed apps deployed to Kubernetes clusters. This model is not acceptable.

- In the past, low-latency apps were built by specialized senior teams at a huge cost. Despite this, the results were never good enough.

- The Non-Blocking model is the key. Different languages and frameworks are focused on providing concurrent and parallel computing features out of the box.

- Golang (as a programming language) and Akka (as the reference framework for the Actors Model in the JVM) are key references to obtain low latency apps with a low cost and an effective life cycle and duration.
